Implicit Skinning: Real-Time Skin Deformation with Contact Modeling

Publication: ACM SIGGRAPH, 2013

Rodolphe Vaillant1,2, Loïc Barthe1, Gaël Guennebaud3, Marie-Paule Cani4, Damien Rohmer5, Brian Wyvill2, Olivier Gourmel1 and Mathias Paulin1

1IRIT - Université de Toulouse,

2University of Victoria, 3Inria Bordeaux,

4LJK - Grenoble Universités - Inria,

5CPE Lyon - Inria

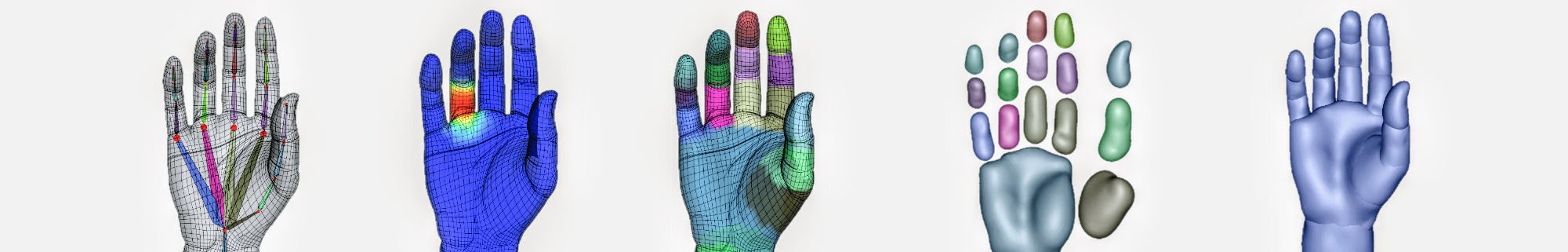

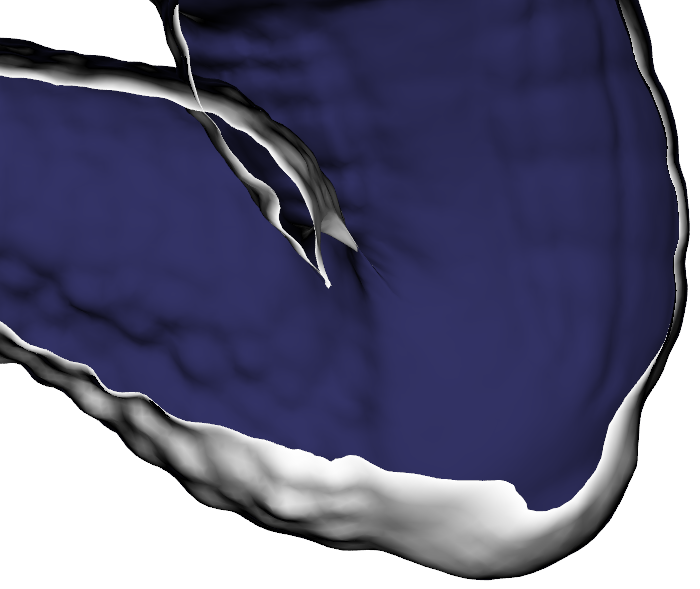

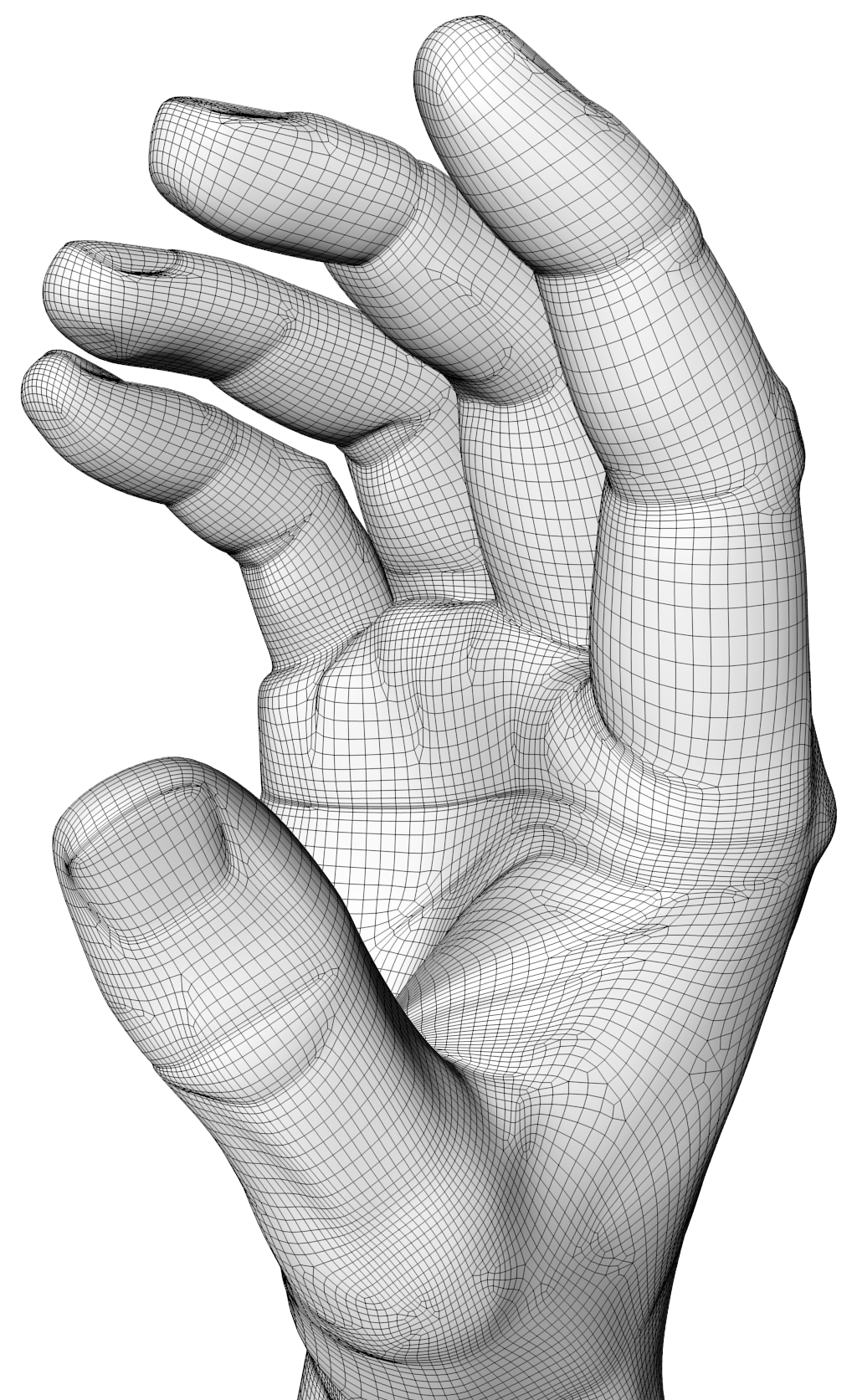

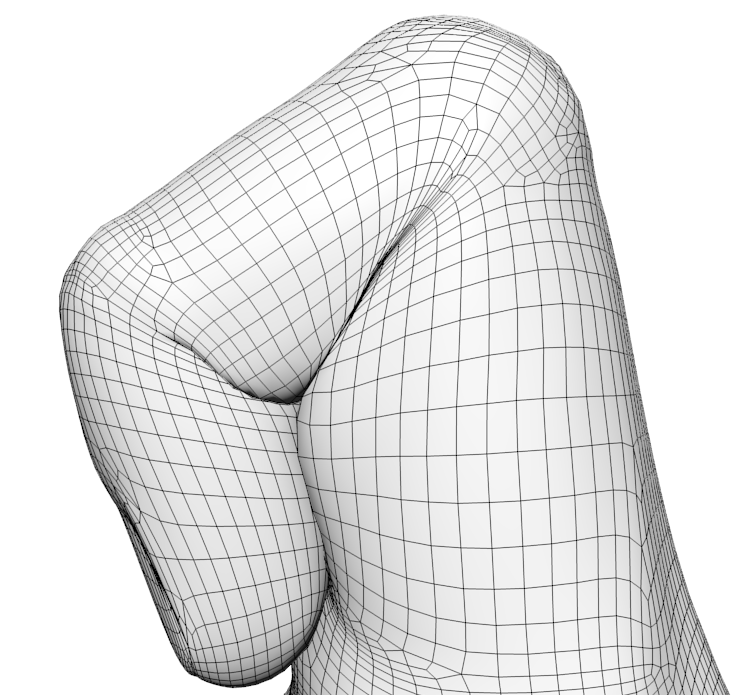

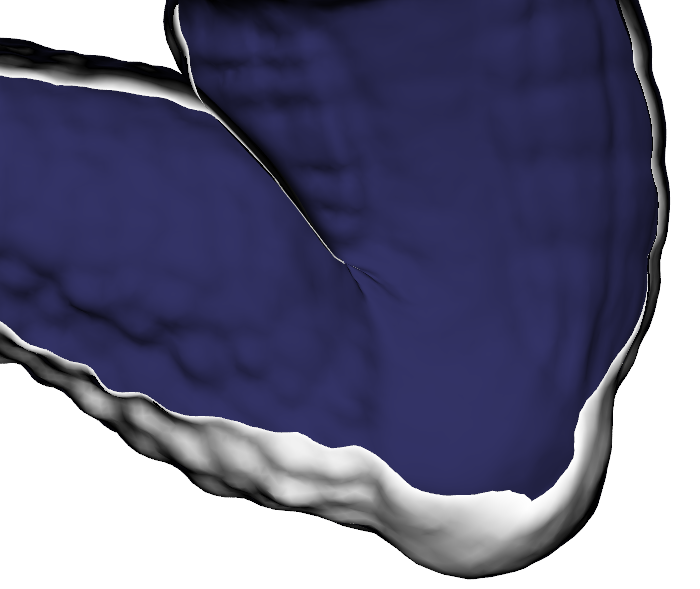

(a) Self-intersection of the Armadillo's knee with dual quaternion skinning. (b) Implicit Skinning produces the skin fold and contact. (c), (d) Two poses of the finger with Implicit Skinning generating the contact surface and organic bulge. (e) Implicit Skinning of an animated character at around 95 fps.

Summary

We present Implicit Skinning, a real-time method for character skinning. The technique is a post-process applied on top of a geometric skinning (such as linear blending or dual quaternions). It handles self-collisions of the limbs by producing skin contact effects and plausible organic bulges in real time. In addition, it avoids the usual collapsing or bulging artifacts seen with linear blending or dual quaternions at the skeleton's joints.
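To make the collapsing artifact mentioned above concrete, here is a minimal sketch of plain linear blend skinning in 2D. It is an illustration only, not code from the project: the helper names (`rotate_z`, `linear_blend`), the single vertex, and the equal 0.5/0.5 weights are all assumptions chosen for the example.

```python
import math

def rotate_z(point, angle):
    """Rotate a 2D point about the origin (the joint) by `angle` radians."""
    x, y = point
    c, s = math.cos(angle), math.sin(angle)
    return (c * x - s * y, s * x + c * y)

def linear_blend(point, angles, weights):
    """Linear blend skinning: weighted average of the point as moved by each bone."""
    bx = by = 0.0
    for angle, w in zip(angles, weights):
        x, y = rotate_z(point, angle)
        bx += w * x
        by += w * y
    return (bx, by)

# Hypothetical setup: a vertex at distance 1 from the joint,
# influenced equally by two bones.
vertex = (1.0, 0.0)
weights = (0.5, 0.5)

for degrees in (0, 90, 180):
    # Bone 0 stays at rest, bone 1 rotates by `degrees` around the joint.
    skinned = linear_blend(vertex, (0.0, math.radians(degrees)), weights)
    radius = math.hypot(*skinned)
    print(f"bend {degrees:3d} deg -> distance from joint {radius:.3f}")
```

Because the blend averages positions rather than rotations, the vertex drifts toward the joint as the bend angle grows and reaches it at 180 degrees. This loss of volume is the kind of artifact a post-process can correct.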

To achieve these effects we need a volumetric representation of the mesh. The idea behind Implicit Skinning is to use a set of implicit surfaces (3D distance fields/scalar fields) rather than a tetrahedral or voxel mesh with a slow physical simulation. For each limb, we automatically generate the implicit surface shape and use it to adjust the positions of the vertices produced by the geometric skinning, without loss of detail.
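As a rough illustration of how a scalar field can adjust a vertex position, the sketch below moves a point along the field gradient until the field reaches a target value. It shows the general idea only and is not the project's implementation: the ellipsoid-like field, the finite-difference gradient, the function names, and the tolerance are all assumptions made for this example.

```python
def gradient(f, p, h=1e-5):
    """Central-difference estimate of the gradient of scalar field f at point p."""
    g = []
    for i in range(3):
        hi, lo = list(p), list(p)
        hi[i] += h
        lo[i] -= h
        g.append((f(hi) - f(lo)) / (2.0 * h))
    return g

def project_to_iso(f, p, iso, max_iters=20, tol=1e-6):
    """Move p along the gradient of f until f(p) is within tol of iso."""
    p = list(p)
    for _ in range(max_iters):
        err = f(p) - iso
        if abs(err) < tol:
            break
        g = gradient(f, p)
        g2 = sum(c * c for c in g)
        if g2 < 1e-12:
            break  # flat region: no usable direction, stop here
        # Newton-style step along the gradient direction.
        p = [pc - err * gc / g2 for pc, gc in zip(p, g)]
    return p

def limb_field(p):
    """Hypothetical stand-in for a limb's field: value 1 on an ellipsoid."""
    x, y, z = p
    return x * x + 0.25 * y * y + z * z

# A vertex that the geometric skinning left off the surface (made-up position).
skinned_vertex = (1.5, 1.0, 0.5)
corrected = project_to_iso(limb_field, skinned_vertex, iso=1.0)
print("corrected vertex:", [round(c, 4) for c in corrected])
print("field value:", round(limb_field(corrected), 6))
```

Each vertex is handled independently with a few field evaluations, so no triangle-to-triangle collision test is needed in this kind of scheme.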

As Implicit Skinning acts as a post-process, it fits well into the standard animation pipeline. Moreover, it requires no intensive computation step such as an explicit collision detection between triangles, and therefore provides real-time performance.

[ Paper 19.7MB ]

Video

[ Video file 45.3MB ] [ captions ] [ Play on YouTube ]

Presentation

[ Talk slides ] (ppt 2013 and script embedded in notes)

Figures

Need to use some figures from our paper? Go ahead:

Acknowledgments

The Implicit Skinning project has been partially funded by the IM&M project (ANR-11-JS02-007) and the advanced grant EXPRESSIVE from the European Research Council. Partial funding also comes from the Natural Sciences and Engineering Research Council of Canada, the GRAND NCE, Canada, and Intel Corp. Finally, this work received partial support from the Royal Society Wolfson Research Merit Award.

We thank the artists, companies and universities who provided us with nice 3D models. The Juna model comes from Rogério Perdiz. The Dana and Carl models come from the company MIXAMO. Finally, the famous Armadillo model comes from the Stanford University 3D scanning repository.

We also thank the Blender Foundation for providing everyone with the Blender software, which we used for some of the enhanced rendering in the video. The rendering setup is a modified version of the Sintel Lite rendering setup.

Google links: Implicit Skinning project; Implicit Skinning on Blender 1; Implicit Skinning at SIGGRAPH 2013; Implicit Skinning artist debate; Implicit Skinning raises Ton Roosendaal's interest; Implicit Skinning requested in 3d Studio Max
